Roblox Repeatedly Sued for Harm to Children and Teens

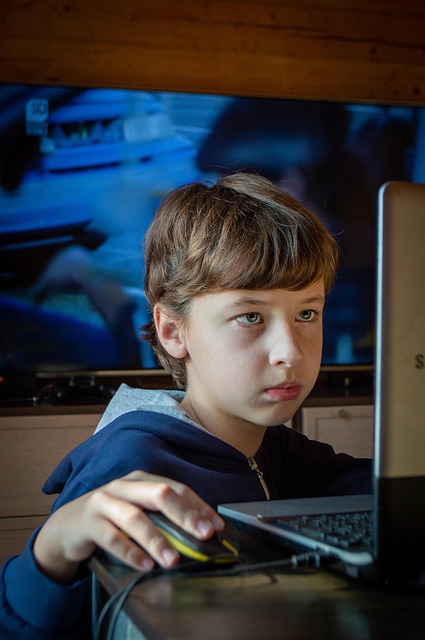

A string of lawsuits has been filed against Roblox alleging that the platform allows child grooming and exploitation. In November, it was reported that the company faced more than 35 lawsuits. Many of these lawsuits have been brought by parents, but states have also brought legal action against Roblox. Some of these lawsuits allege that children and teens are repeatedly exposed to sexually explicit content, exploitation, and grooming on the platform; some allege that the platform enables sexual predators to contact children even when they aren’t connected. In one case, a perpetrator started communications by posing as a 16-year-old and then moved the conversation off of Roblox and onto Discord. There, he coerced the child with Robux gift cards in exchange for explicit videos and images and tried to arrange an in-person meeting, even threatening the child by saying that he knew the child’s home address.

In December of last year, a 13-year-old took her own life, and a lawsuit was filed against the gaming platforms Roblox and Discord. The teenager began playing Roblox when she was eight years old and experienced a downturn in her mental health. She recorded the countdown to her death in her diary. If your child was harmed by Roblox or Discord, you should call the seasoned Chicago-based product liability lawyers of Moll Law Group. Billions have been recovered in cases with which we’ve been involved.

Call Moll Law Group About Whether Your Claim is Viable

According to the lawsuit involving the 13-year-old, Roblox allows experiences that center on Jeffrey Epstein; the 1999 Columbine school shooting; and shootings of minorities with the words “ethnic cleansing” onscreen. The filing also claims that Discord permits content related to self-harm and that adults have used the platform to blackmail or ask teenagers to harm themselves or commit suicide in front of the phone or computer camera.

Roblox’s 2023 annual report revealed that it had 68.5 million daily active users. Of these, 21% were under 9 years old and 16% were ages 13-16. In 2024, the company reported $3.6 billion in revenue. Roblox has claimed that it filters text chat in several languages to block inappropriate content such as bullying, extremism, violence, and sexual content. However, the lawsuits allege otherwise.

Most recently, Roblox announced AI safety measures in the form of facial age checks, among other things, but it remains to be seen whether they will be effective. In January 2026, Roblox’s new safety efforts will ask users to use the platform’s Facial Age Estimation technology. The platform app and the phone or computer camera will be used to scan users’ faces, or users will be asked to give Roblox a valid form of ID to verify their ages. Roblox plans to have the platform’s chat feature turned off by default except when a child’s parent gives consent after an age check.

The new efforts cannot bring loved ones back. If your child was harmed on Roblox or Discord, call the seasoned Chicago-based product liability lawyers of Moll Law Group to determine whether you have viable grounds to sue for damages. When our firm can prove a manufacturer’s liability for injuries or wrongful death, we may be able to recover damages on behalf of our clients. Compensation for injuries may include the cost of medical expenses, out-of-pocket costs, pain and suffering, and mental anguish. We dedicate ourselves to fighting for injured consumers around the country. Complete our online form or call us at 312.462.1700.

Illinois Injury and Mass Tort Lawyer Blog